Documentation


Overview

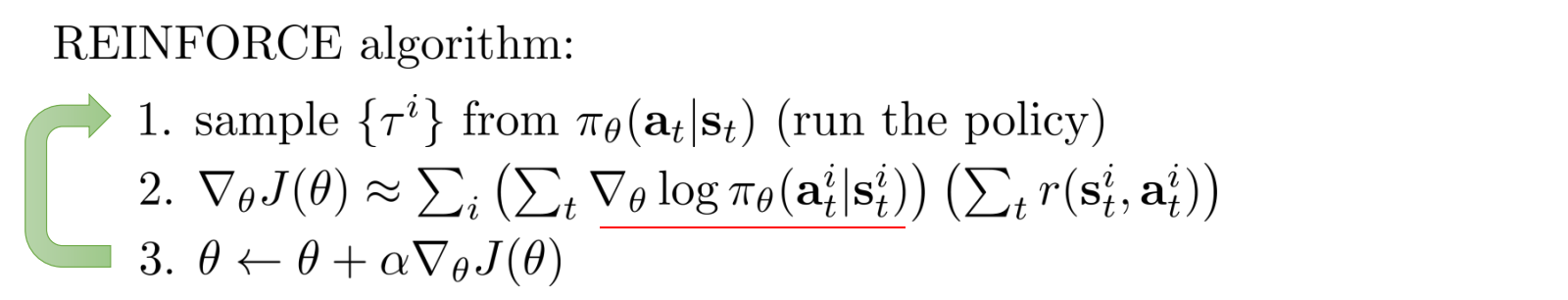

Package reinforce is an agent implementation of the REINFORCE algorithm.
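A minimal sketch of the update REINFORCE performs, following the standard textbook formulation rather than this package's code: once an episode ends, the loss is the negative sum over time steps of log π(a_t|s_t) times the return G_t, so minimizing it makes actions that preceded high returns more likely. The helper reinforceLoss and its plain-slice inputs are hypothetical.

```go
package main

import "fmt"

// reinforceLoss is a hypothetical helper illustrating the REINFORCE objective.
// logProbs[t] is log π(a_t|s_t) for the action taken at step t, and
// returns[t] is the discounted return G_t from step t onward.
// The loss is -Σ_t log π(a_t|s_t) * G_t; gradient descent on it raises the
// log-probability of actions that were followed by high returns.
func reinforceLoss(logProbs, returns []float64) (float64, error) {
	if len(logProbs) != len(returns) {
		return 0, fmt.Errorf("length mismatch: %d log-probs vs %d returns", len(logProbs), len(returns))
	}
	loss := 0.0
	for t := range logProbs {
		loss -= logProbs[t] * returns[t]
	}
	return loss, nil
}

func main() {
	// A two-step episode with made-up log-probabilities and returns.
	loss, err := reinforceLoss([]float64{-0.5, -1.0}, []float64{2.0, 1.0})
	if err != nil {
		panic(err)
	}
	fmt.Println(loss)
}
```

In a real agent the log-probabilities come from the policy network, so the gradient of this loss flows back into the policy weights.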

Index

Constants

This section is empty.

Variables


```go
var DefaultAgentConfig = &AgentConfig{
	Hyperparameters: DefaultHyperparameters,
	PolicyConfig:    DefaultPolicyConfig,
	Base:            agentv1.NewBase("REINFORCE"),
}
```

DefaultAgentConfig is the default config for a REINFORCE agent.


```go
var DefaultFCLayerBuilder = func(x, y *modelv1.Input) []layer.Config {
	return []layer.Config{
		layer.FC{Input: x.Squeeze()[0], Output: 24},
		layer.FC{Input: 24, Output: 24},
		layer.FC{Input: 24, Output: y.Squeeze()[0], Activation: layer.Softmax},
	}
}
```

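DefaultFCLayerBuilder is the default fully connected layer builder. It builds two hidden layers of 24 units each, followed by an output layer sized to y with a softmax activation.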


```go
var DefaultHyperparameters = &Hyperparameters{
	Gamma: 0.99,
}
```

DefaultHyperparameters are the default hyperparameters.


```go
var DefaultPolicyConfig = &PolicyConfig{
	Optimizer:    g.NewAdamSolver(),
	LayerBuilder: DefaultFCLayerBuilder,
	Track:        true,
}
```

DefaultPolicyConfig is the default configuration for a policy.

Functions

func MakePolicy

MakePolicy makes a model.

Types

type Agent

```go
type Agent struct {
	// Base for the agent.
	*agentv1.Base

	// Hyperparameters for the REINFORCE agent.
	*Hyperparameters

	// Policy by which the agent acts.
	Policy model.Model

	// Memory of the agent.
	Memory *Memory
	// contains filtered or unexported fields
}
```

Agent is a REINFORCE agent.
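Because REINFORCE is a Monte Carlo method, it can only update once an episode is complete, so the agent has to keep that episode's steps until then. The Memory type is not documented in this section; the episodeMemory type below is a hypothetical stand-in showing one way such a per-episode buffer could look.

```go
package main

import "fmt"

// episodeMemory is a hypothetical per-episode buffer; it is NOT this
// package's Memory type, whose fields are not shown in this section.
type episodeMemory struct {
	rewards  []float64
	logProbs []float64
}

// Remember records the reward and log π(a_t|s_t) for one time step.
func (m *episodeMemory) Remember(reward, logProb float64) {
	m.rewards = append(m.rewards, reward)
	m.logProbs = append(m.logProbs, logProb)
}

// Len reports how many steps have been recorded this episode.
func (m *episodeMemory) Len() int { return len(m.rewards) }

// Reset empties the buffer for the next episode while keeping the
// already-allocated capacity.
func (m *episodeMemory) Reset() {
	m.rewards = m.rewards[:0]
	m.logProbs = m.logProbs[:0]
}

func main() {
	var m episodeMemory
	m.Remember(1.0, -0.7)
	m.Remember(0.0, -0.2)
	fmt.Println(m.Len())
	m.Reset()
	fmt.Println(m.Len())
}
```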

func NewAgent

```go
func NewAgent(c *AgentConfig, env *envv1.Env) (*Agent, error)
```

NewAgent returns a new REINFORCE agent.

type AgentConfig

```go
type AgentConfig struct {
	// Base for the agent.
	Base *agentv1.Base

	// Hyperparameters for the agent.
	*Hyperparameters

	// PolicyConfig for the agent.
	PolicyConfig *PolicyConfig
}
```

AgentConfig is the config for a REINFORCE agent.

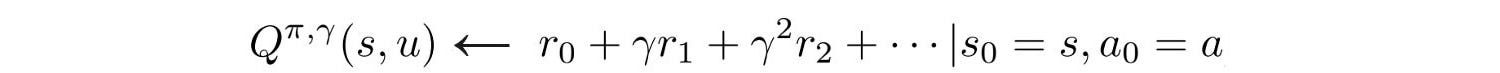

type Hyperparameters

```go
type Hyperparameters struct {
	// Gamma is the discount factor (0≤γ≤1). It determines how much importance to give to
	// future rewards. A value close to 1 makes the agent account for long-term reward, whereas
	// a value of 0 makes the agent consider only the immediate reward, making it greedy.
	Gamma float32
}
```

Hyperparameters for the REINFORCE agent.
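Gamma enters the algorithm through the discounted return G_t = r_t + γ·G_{t+1}, computed backwards from the end of an episode. A minimal sketch, assuming the episode's rewards arrive as a float32 slice to match the Gamma field; discountedReturns is a hypothetical name.

```go
package main

import "fmt"

// discountedReturns (hypothetical helper) computes G_t = r_t + gamma*G_{t+1}
// for every step of an episode. Iterating backwards lets each return reuse
// the one computed for the following step.
func discountedReturns(rewards []float32, gamma float32) []float32 {
	returns := make([]float32, len(rewards))
	var g float32 // return from the step after t; zero past the episode's end
	for t := len(rewards) - 1; t >= 0; t-- {
		g = rewards[t] + gamma*g
		returns[t] = g
	}
	return returns
}

func main() {
	fmt.Println(discountedReturns([]float32{1, 1, 1}, 0.5))
}
```

With γ = 0 each return equals its immediate reward, which is the greedy case the Gamma comment describes; with γ = 1 each return is the plain sum of the remaining rewards.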

type LayerBuilder

LayerBuilder builds the layers of a policy model.

type PolicyConfig

```go
type PolicyConfig struct {
	// Optimizer to optimize the weights with regard to the error.
	Optimizer g.Solver

	// LayerBuilder is a builder of layers.
	LayerBuilder LayerBuilder

	// Track is whether to track the model.
	Track bool
}
```

PolicyConfig is the configuration for a policy.
