Documentation ¶

Index ¶

- Constants

- func EnsureEnvars(dest *Envars) error

- func GetTargetIsHealthy(o *elbv2.DescribeTargetHealthOutput, targetId *string, targetPort *int64) *string

- func LoadDefinitionsFromFiles(dir string) (*ecs.RegisterTaskDefinitionInput, *ecs.CreateServiceInput, error)

- func MergeEnvars(dest *Envars, src *Envars)

- func NewErrorf(f string, args ...interface{}) error

- func ReadAndUnmarshalJson(path string, dest interface{}) ([]byte, error)

- func ReadFileAndApplyEnvars(path string) ([]byte, error)

- type Cage

- type Envars

- type Input

- type RollOutResult

- type StartCanaryTaskOutput

- type UpResult

Constants ¶

const CanaryInstanceArnKey = "CAGE_CANARY_INSTANCE_ARN"

optional

const ClusterKey = "CAGE_CLUSTER"

required

const RegionKey = "CAGE_REGION"

const ServiceKey = "CAGE_SERVICE"

const TaskDefinitionArnKey = "CAGE_TASK_DEFINITION_ARN"

either required
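The constants above name the environment variables the package reads. As a minimal sketch of how such keys might be consumed, the example below loads them into a local struct. The `settings` type and the `loadSettings` helper are hypothetical and not part of this package. Treating `CAGE_CLUSTER` and `CAGE_SERVICE` as required is an assumption based on the `required:"true"` tags on the `Envars` type. The "either required" rule on `TaskDefinitionArnKey` is not validated here because the page does not say what the alternative is.

```go
package main

import (
	"fmt"
	"os"
)

// Key names copied from the Constants section of this page.
const (
	CanaryInstanceArnKey = "CAGE_CANARY_INSTANCE_ARN"
	ClusterKey           = "CAGE_CLUSTER"
	RegionKey            = "CAGE_REGION"
	ServiceKey           = "CAGE_SERVICE"
	TaskDefinitionArnKey = "CAGE_TASK_DEFINITION_ARN"
)

// settings is a hypothetical stand-in for the package's Envars type.
type settings struct {
	Region            string
	Cluster           string
	Service           string
	CanaryInstanceArn string
	TaskDefinitionArn string
}

// loadSettings reads every key through getenv (os.Getenv in normal use)
// and returns an error listing any required keys that are empty.
// Assumption: only the cluster and service keys are treated as required.
func loadSettings(getenv func(string) string) (settings, error) {
	s := settings{
		Region:            getenv(RegionKey),
		Cluster:           getenv(ClusterKey),
		Service:           getenv(ServiceKey),
		CanaryInstanceArn: getenv(CanaryInstanceArnKey),
		TaskDefinitionArn: getenv(TaskDefinitionArnKey),
	}
	var missing []string
	if s.Cluster == "" {
		missing = append(missing, ClusterKey)
	}
	if s.Service == "" {
		missing = append(missing, ServiceKey)
	}
	if len(missing) > 0 {
		return s, fmt.Errorf("missing required environment variables: %v", missing)
	}
	return s, nil
}

func main() {
	s, err := loadSettings(os.Getenv)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%+v\n", s)
}
```

Passing the lookup function as a parameter keeps the sketch testable without touching the real process environment.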

Variables ¶

This section is empty.

Functions ¶

func EnsureEnvars ¶

func EnsureEnvars(dest *Envars) error

func GetTargetIsHealthy ¶

func GetTargetIsHealthy(o *elbv2.DescribeTargetHealthOutput, targetId *string, targetPort *int64) *string

func LoadDefinitionsFromFiles ¶

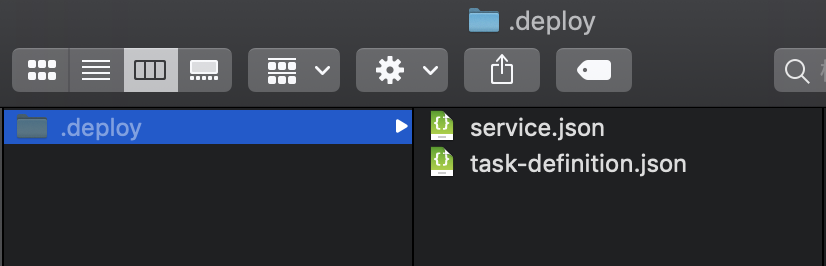

func LoadDefinitionsFromFiles(dir string) (*ecs.RegisterTaskDefinitionInput, *ecs.CreateServiceInput, error)

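func MergeEnvars ¶

func MergeEnvars(dest *Envars, src *Envars)

func NewErrorf ¶

func NewErrorf(f string, args ...interface{}) error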

func ReadAndUnmarshalJson ¶

func ReadAndUnmarshalJson(path string, dest interface{}) ([]byte, error)

func ReadFileAndApplyEnvars ¶

func ReadFileAndApplyEnvars(path string) ([]byte, error)

Types ¶

type Cage ¶

type Envars ¶

type Envars struct {
	Region                 string `json:"region" type:"string"`
	Cluster                string `json:"cluster" type:"string" required:"true"`
	Service                string `json:"service" type:"string" required:"true"`
	CanaryInstanceArn      string
	TaskDefinitionArn      string `json:"nextTaskDefinitionArn" type:"string"`
	TaskDefinitionInput    *ecs.RegisterTaskDefinitionInput
	ServiceDefinitionInput *ecs.CreateServiceInput
	// contains filtered or unexported fields
}
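The struct tags on `Envars` determine which JSON keys map to which fields. Note that `TaskDefinitionArn` is read from the key `nextTaskDefinitionArn`, not `taskDefinitionArn`. The sketch below illustrates that mapping with a local mirror type, `envarsJSON`, which is hypothetical and copies only the JSON-tagged string fields. The JSON values are made-up placeholders, and `decodeEnvars` is an illustrative helper, not a function of this package.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// envarsJSON mirrors only the JSON-tagged string fields of Envars.
// It is a local illustration, not the package's own type.
type envarsJSON struct {
	Region            string `json:"region"`
	Cluster           string `json:"cluster"`
	Service           string `json:"service"`
	TaskDefinitionArn string `json:"nextTaskDefinitionArn"`
}

// decodeEnvars unmarshals a JSON document into the mirror type.
func decodeEnvars(data []byte) (envarsJSON, error) {
	var e envarsJSON
	err := json.Unmarshal(data, &e)
	return e, err
}

func main() {
	// Placeholder values for illustration only.
	doc := []byte(`{
		"region": "us-west-2",
		"cluster": "my-cluster",
		"service": "my-service",
		"nextTaskDefinitionArn": "arn:aws:ecs:us-west-2:123456789012:task-definition/my-task:2"
	}`)
	e, err := decodeEnvars(doc)
	if err != nil {
		fmt.Println("decode error:", err)
		return
	}
	fmt.Printf("%+v\n", e)
}
```

The `type` and `required` tags in the original struct are not interpreted by `encoding/json`, so any required-field checking has to happen separately from decoding.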

type RollOutResult ¶

type StartCanaryTaskOutput ¶

type StartCanaryTaskOutput struct {
	// contains filtered or unexported fields
}

Directories ¶

| Path | Synopsis |
|---|---|
| cli | |
| mocks | |
| github.com/aws/aws-sdk-go/service/ec2/ec2iface | Package mock_ec2iface is a generated GoMock package. |
| github.com/aws/aws-sdk-go/service/ecs/ecsiface | Package mock_ecsiface is a generated GoMock package. |
| github.com/aws/aws-sdk-go/service/elbv2/elbv2iface | Package mock_elbv2iface is a generated GoMock package. |
