Documentation


Overview ¶

Package hyperpb is a highly optimized dynamic message library for read-only Protobuf workloads. It is designed to be a drop-in replacement for dynamicpb, protobuf-go's canonical solution for working with completely dynamic messages.

hyperpb's parser is an efficient VM for a special instruction set, a variant of table-driven parsing (TDP), pioneered by the UPB project.
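To make the idea of table-driven parsing concrete, here is a toy sketch: the decoder reads each wire-format tag and looks the field number up in a table of handlers, instead of branching on field numbers in hand-written code. It understands only varint fields and skips unknown ones. It is in no way hyperpb's actual instruction set; `table`, `fieldHandler`, and `parse` are hypothetical names.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// fieldHandler stores one decoded varint into the output.
type fieldHandler func(v uint64, out map[string]uint64)

// table maps field numbers to handlers. This toy only understands varint
// (wire type 0) fields; a real TDP parser's tables are far more elaborate.
type table map[uint64]fieldHandler

// parse decodes wire-format bytes by looking each field number up in t.
func parse(t table, data []byte) (map[string]uint64, error) {
	out := make(map[string]uint64)
	for len(data) > 0 {
		tag, n := binary.Uvarint(data) // tag = field<<3 | wire type
		if n <= 0 {
			return nil, errors.New("malformed tag")
		}
		data = data[n:]
		if wt := tag & 7; wt != 0 {
			return nil, fmt.Errorf("unsupported wire type %d", wt)
		}
		v, n := binary.Uvarint(data)
		if n <= 0 {
			return nil, errors.New("malformed varint")
		}
		data = data[n:]
		if h, ok := t[tag>>3]; ok {
			h(v, out) // Known field: dispatch through the table.
		} // Unknown fields are silently skipped in this sketch.
	}
	return out, nil
}

func main() {
	t := table{
		1: func(v uint64, out map[string]uint64) { out["id"] = v },
		2: func(v uint64, out map[string]uint64) { out["count"] = v },
	}
	// Field 1 = 150 (08 96 01), then field 2 = 3 (10 03).
	msg, err := parse(t, []byte{0x08, 0x96, 0x01, 0x10, 0x03})
	fmt.Println(msg, err) // map[count:3 id:150] <nil>
}
```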

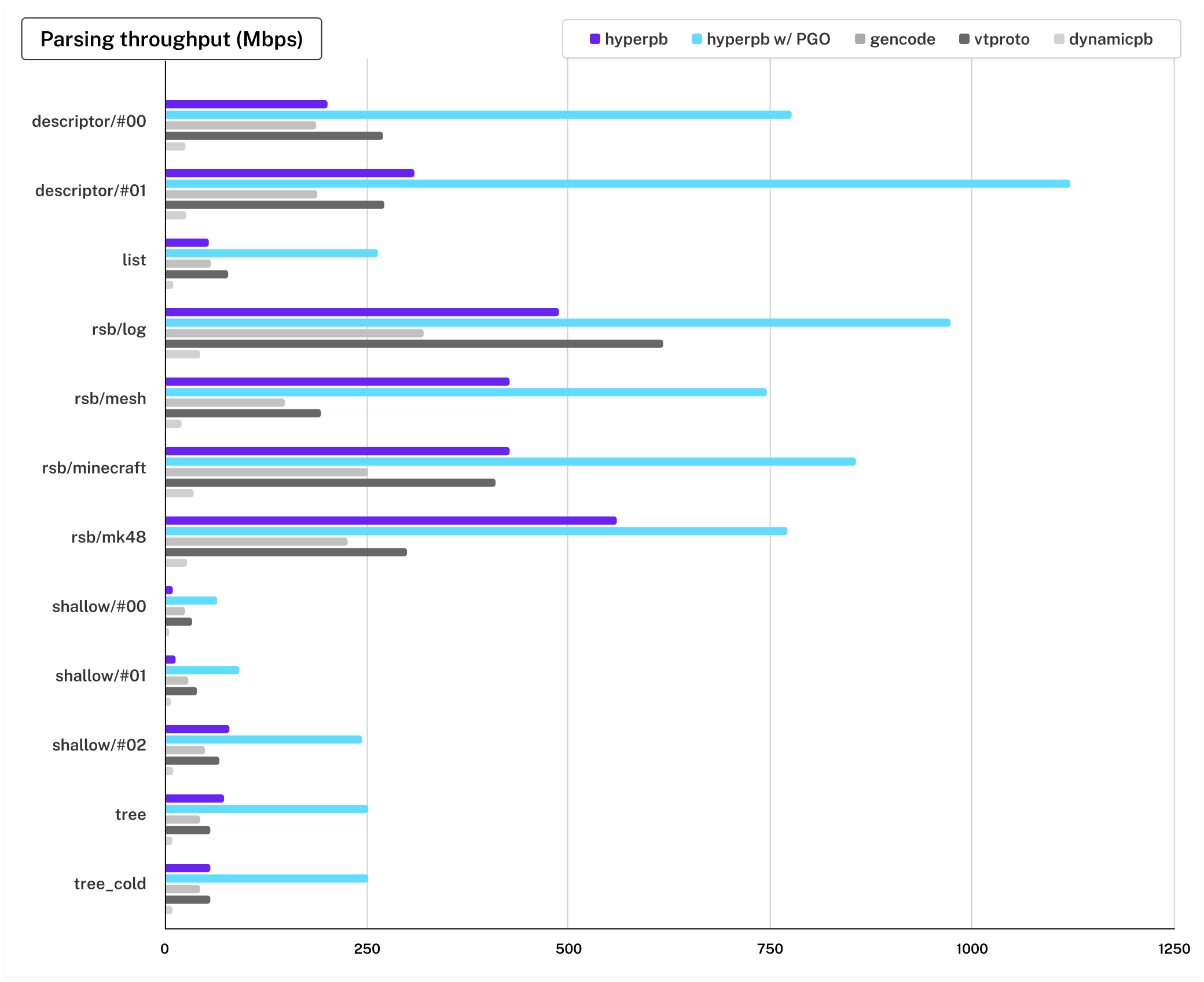

Our parser is very fast: it is roughly 10x faster than dynamicpb, and often 2-3x faster than protobuf-go's generated code, especially for workloads with many nested messages.

Usage ¶

The core conceit of hyperpb is that you must pre-compile a parser at runtime using hyperpb.CompileMessageDescriptor, much like regular expressions must be compiled with regexp.Compile before use. Doing this allows hyperpb to run optimization passes on your message, and deferring it to runtime lets us continuously improve layout optimizations without making any source-breaking changes.
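Because compilation is slow and should happen once per type, a small memoizing cache is one way to follow that advice. The sketch below is generic and hypothetical (`compileCache` is not part of hyperpb); in real code `V` would presumably be `*hyperpb.MessageType` and the compile function would call `hyperpb.CompileMessageDescriptor`. A string stands in for the compiled type so the sketch runs on its own.

```go
package main

import (
	"fmt"
	"sync"
)

// compileCache memoizes an expensive compile step per key, so each key is
// compiled at most once even when Get is called from several goroutines.
type compileCache[K comparable, V any] struct {
	mu      sync.Mutex
	entries map[K]*cacheEntry[V]
	compile func(K) V
}

type cacheEntry[V any] struct {
	once sync.Once
	val  V
}

func newCompileCache[K comparable, V any](compile func(K) V) *compileCache[K, V] {
	return &compileCache[K, V]{entries: make(map[K]*cacheEntry[V]), compile: compile}
}

// Get returns the cached value for key, compiling it on first use.
func (c *compileCache[K, V]) Get(key K) V {
	c.mu.Lock()
	e, ok := c.entries[key]
	if !ok {
		e = &cacheEntry[V]{}
		c.entries[key] = e
	}
	c.mu.Unlock()
	// The lock is not held while compiling, so slow compiles of
	// different keys do not block each other.
	e.once.Do(func() { e.val = c.compile(key) })
	return e.val
}

func main() {
	calls := 0
	cache := newCompileCache(func(name string) string {
		calls++ // Stand-in for an expensive compile.
		return "compiled:" + name
	})
	fmt.Println(cache.Get("example.weather.v1.WeatherReport"))
	fmt.Println(cache.Get("example.weather.v1.WeatherReport"))
	fmt.Println("compile calls:", calls) // compile calls: 1
}
```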

For example, let's say that we want to compile a parser for some type baked into our binary, and parse some data with it.


    // Compile a type for your message. Make sure to cache this!
    msgType := hyperpb.CompileMessageDescriptor(
        (*weatherv1.WeatherReport)(nil).ProtoReflect().Descriptor(),
    )

    data := /* ... */

    // Allocate a fresh message using that type.
    msg := hyperpb.NewMessage(msgType)

    // Parse the message, using proto.Unmarshal like any other message type.
    if err := proto.Unmarshal(data, msg); err != nil {
        // Handle parse failure.
    }

    // Use reflection to read some fields. hyperpb currently only supports access
    // by reflection. You can also look up fields by index using fields.Get(), which
    // is less legible but doesn't hit a hashmap.
    fields := msgType.Descriptor().Fields()

    // Get returns a protoreflect.Value, which can be printed directly...
    fmt.Println(msg.Get(fields.ByName("region")))

    // ... or converted to an explicit type to operate on, such as with List(),
    // which converts a repeated field into something with indexing operations.
    stations := msg.Get(fields.ByName("weather_stations")).List()
    for i := range stations.Len() {
        // Get returns a protoreflect.Value too, so we need to convert it into
        // a message to keep extracting fields.
        station := stations.Get(i).Message()
        fields := station.Descriptor().Fields()

        // Here we extract each of the fields we care about from the message.
        // Again, we could use fields.Get if we know the indices.
        fmt.Println("station:", station.Get(fields.ByName("station")))
        fmt.Println("frequency:", station.Get(fields.ByName("frequency")))
        fmt.Println("temperature:", station.Get(fields.ByName("temperature")))
        fmt.Println("pressure:", station.Get(fields.ByName("pressure")))
        fmt.Println("wind_speed:", station.Get(fields.ByName("wind_speed")))
        fmt.Println("conditions:", station.Get(fields.ByName("conditions")))
    }

Currently, hyperpb only supports manipulating messages through the reflection API. It shines best when you need to write a very generic service that downloads types off the network and parses messages using those types, which forces you to use reflection anyway.

Mutation is currently not supported; any operation that would mutate an already-parsed message panics. The documentation for each Message method states whether it panics.

Memory Reuse ¶

hyperpb has a memory-reuse mechanism that side-steps the Go garbage collector for improved allocation latency. Shared is book-keeping state and resources shared by all messages resulting from the same parse. After the message goes out of scope, these resources are ordinarily reclaimed by the garbage collector.

However, a Shared can be retained after its associated message goes away, allowing for re-use. Consider the following example of a request handler:

    type requestContext struct {
        shared *hyperpb.Shared
        types  map[string]*hyperpb.MessageType
        // ...
    }

    func (c *requestContext) Handle(req Request) {
        // ...

        msgType := c.types[req.Type]
        msg := c.shared.NewMessage(msgType)
        // Release this request's resources for reuse once Handle returns;
        // msg must not be used after that point.
        defer c.shared.Free()

        c.process(msg, req, ...)
    }

Beware that msg must not be used after Shared.Free is called; doing so will result in memory errors that Go cannot protect you from.
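The requestContext above owns a single Shared, which suits handling one request at a time. If several requests are handled concurrently, one option is to give each in-flight request its own reusable state from a sync.Pool. The sketch below uses a hypothetical `scratch` type as a stand-in for `*hyperpb.Shared` (it is not hyperpb's API) and follows the same discipline: free the state before handing it back, and let nothing that points into it escape the handler.

```go
package main

import (
	"fmt"
	"sync"
)

// scratch is a hypothetical stand-in for per-parse state such as a
// *hyperpb.Shared; it only models "allocate, use, then free for reuse".
type scratch struct {
	buf []byte
}

// Free drops the contents but keeps the capacity for the next request.
func (s *scratch) Free() { s.buf = s.buf[:0] }

var pool = sync.Pool{New: func() any { return new(scratch) }}

// handle borrows state from the pool for the lifetime of one request.
func handle(payload string) int {
	s := pool.Get().(*scratch)
	defer pool.Put(s) // Runs second: return the freed state to the pool.
	defer s.Free()    // Runs first: release resources before reuse.

	s.buf = append(s.buf, payload...)
	// Return a plain value, never a view into s.buf, since s is reused.
	return len(s.buf)
}

func main() {
	fmt.Println(handle("hello"), handle("hi")) // 5 2
}
```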

Profile-Guided Optimization (PGO) ¶

`hyperpb` supports online profile-guided optimization (PGO), which squeezes extra performance out of the parser by optimizing it with knowledge of what the average message actually looks like. For example, using PGO, the parser can predict the expected size of repeated fields and allocate more intelligently.

Suppose you have a corpus of messages for a particular type. You can build an optimized type, using that corpus as the profile, with `MessageType.Recompile`:

    func compilePGO(md protoreflect.MessageDescriptor, corpus [][]byte) *hyperpb.MessageType {
        // Compile the type without any profiling information.
        msgType := hyperpb.CompileMessageDescriptor(md)

        // Construct a new profile recorder.
        profile := msgType.NewProfile()

        // Parse all of the specimens in the corpus, making sure to record a profile
        // for all of them (a sampling rate of 1.0 records every message).
        s := new(hyperpb.Shared)
        for _, specimen := range corpus {
            // Parse errors are ignored here; only the recorded profile matters.
            _ = s.NewMessage(msgType).Unmarshal(specimen, hyperpb.WithRecordProfile(profile, 1.0))
            s.Free()
        }

        // Recompile with the profile.
        return msgType.Recompile(profile)
    }

Compatibility ¶

hyperpb is experimental software, and the API may change drastically before v1. It currently implements all Protobuf language constructs. It does not implement mutation of parsed messages, however.

hyperpb is currently only supported on 64-bit x86 and ARM targets (Go calls these amd64 and arm64). The library will not build on other architectures, and PRs to add new architectures without a way to run tests for them in CI will be rejected.

Example ¶

Example data matches the data in the README example and should be kept in sync.

    package main

    import (
        "fmt"

        weatherv1 "buf.build/gen/go/bufbuild/hyperpb-examples/protocolbuffers/go/example/weather/v1"
        "google.golang.org/protobuf/proto"

        "buf.build/go/hyperpb"
        "buf.build/go/hyperpb/internal/examples"
    )

    func main() {
        // Compile a type for your message. This operation is quite slow, so it
        // should be cached, like regexp.Compile.
        ty := hyperpb.CompileMessageDescriptor((*weatherv1.WeatherReport)(nil).ProtoReflect().Descriptor())

        data := examples.ReadWeatherData() // Read some raw Protobuf-encoded data.

        // Unmarshal it, just how you normally would.
        msg := hyperpb.NewMessage(ty)
        if err := proto.Unmarshal(data, msg); err != nil {
            panic(err)
        }

        // Use reflection to read some fields.
        fields := ty.Descriptor().Fields()
        fmt.Println(msg.Get(fields.ByName("region")))

        stations := msg.Get(fields.ByName("weather_stations")).List()
        for i := range stations.Len() {
            station := stations.Get(i).Message()
            fields := station.Descriptor().Fields()
            fmt.Println("station:", station.Get(fields.ByName("station")))
            fmt.Println("frequency:", station.Get(fields.ByName("frequency")))
            fmt.Println("temperature:", station.Get(fields.ByName("temperature")))
            fmt.Println("pressure:", station.Get(fields.ByName("pressure")))
            fmt.Println("wind_speed:", station.Get(fields.ByName("wind_speed")))
            fmt.Println("conditions:", station.Get(fields.ByName("conditions")))
        }
    }

Output:

    Seattle
    station: KAD93
    frequency: 162.525
    temperature: 11.3
    pressure: 30.08
    wind_speed: 2.3
    conditions: 3
    station: KHB60
    frequency: 162.55
    temperature: 13.7
    pressure: 28.09
    wind_speed: 1.9
    conditions: 3

Example (Protovalidate) ¶

    package main

    import (
        "fmt"

        weatherv1 "buf.build/gen/go/bufbuild/hyperpb-examples/protocolbuffers/go/example/weather/v1"
        "buf.build/go/protovalidate"
        "google.golang.org/protobuf/proto"

        "buf.build/go/hyperpb"
        "buf.build/go/hyperpb/internal/examples"
    )

    func main() {
        // Compile a type for your message. This operation is quite slow, so it
        // should be cached, like regexp.Compile.
        ty := hyperpb.CompileMessageDescriptor((*weatherv1.WeatherReport)(nil).ProtoReflect().Descriptor())

        data := examples.ReadWeatherData() // Read some raw Protobuf-encoded data.

        // Unmarshal it, just how you normally would.
        msg := hyperpb.NewMessage(ty)
        if err := proto.Unmarshal(data, msg); err != nil {
            panic(err)
        }

        // It just works!
        err := protovalidate.Validate(msg)
        fmt.Println("error:", err)
    }

Output:

    error: <nil>

Example (UnmarshalFromDescriptor) ¶

    package main

    import (
        "fmt"

        "google.golang.org/protobuf/proto"
        "google.golang.org/protobuf/types/descriptorpb"

        "buf.build/go/hyperpb"
        "buf.build/go/hyperpb/internal/examples"
    )

    func main() {
        // Download a descriptor off of the network, unmarshal it, and compile a
        // type from it.
        fds := new(descriptorpb.FileDescriptorSet)
        if err := proto.Unmarshal(examples.DownloadWeatherReportSchema(), fds); err != nil {
            panic(err)
        }

        ty, err := hyperpb.CompileFileDescriptorSet(fds,
            "example.weather.v1.WeatherReport", // The type we want to compile.
        )
        if err != nil {
            panic(err)
        }

        data := examples.ReadWeatherData() // Read some raw Protobuf-encoded data.

        // Unmarshal it, just how you normally would.
        msg := hyperpb.NewMessage(ty)
        if err := proto.Unmarshal(data, msg); err != nil {
            panic(err)
        }

        // Use reflection to read some fields.
        fields := ty.Descriptor().Fields()
        fmt.Println(msg.Get(fields.ByName("region")))

        stations := msg.Get(fields.ByName("weather_stations")).List()
        for i := range stations.Len() {
            station := stations.Get(i).Message()
            fields := station.Descriptor().Fields()
            fmt.Println("station:", station.Get(fields.ByName("station")))
            fmt.Println("frequency:", station.Get(fields.ByName("frequency")))
            fmt.Println("temperature:", station.Get(fields.ByName("temperature")))
            fmt.Println("pressure:", station.Get(fields.ByName("pressure")))
            fmt.Println("wind_speed:", station.Get(fields.ByName("wind_speed")))
            fmt.Println("conditions:", station.Get(fields.ByName("conditions")))
        }
    }

Output:

    Seattle
    station: KAD93
    frequency: 162.525
    temperature: 11.3
    pressure: 30.08
    wind_speed: 2.3
    conditions: 3
    station: KHB60
    frequency: 162.55
    temperature: 13.7
    pressure: 28.09
    wind_speed: 1.9
    conditions: 3

Index ¶

- type CompileOption
  - func WithExtensions(resolver compiler.ExtensionResolver) CompileOption
  - func WithExtensionsFromFiles(files *protoregistry.Files) CompileOption
  - func WithExtensionsFromTypes(types *protoregistry.Types) CompileOption
  - func WithProfile(profile *Profile) CompileOption
- type Message
  - func NewMessage(ty *MessageType) *Message
  - func (m *Message) Clear(protoreflect.FieldDescriptor)
  - func (m *Message) Descriptor() protoreflect.MessageDescriptor
  - func (m *Message) Get(fd protoreflect.FieldDescriptor) protoreflect.Value
  - func (m *Message) GetUnknown() protoreflect.RawFields
  - func (m *Message) Has(fd protoreflect.FieldDescriptor) bool
  - func (m *Message) HyperType() *MessageType
  - func (m *Message) Initialized() error
  - func (m *Message) Interface() protoreflect.ProtoMessage
  - func (m *Message) IsValid() bool
  - func (m *Message) Mutable(protoreflect.FieldDescriptor) protoreflect.Value
  - func (m *Message) New() protoreflect.Message
  - func (m *Message) NewField(protoreflect.FieldDescriptor) protoreflect.Value
  - func (m *Message) ProtoMethods() *protoiface.Methods
  - func (m *Message) ProtoReflect() protoreflect.Message
  - func (m *Message) Range(yield func(protoreflect.FieldDescriptor, protoreflect.Value) bool)
  - func (m *Message) Reset()
  - func (m *Message) Set(protoreflect.FieldDescriptor, protoreflect.Value)
  - func (m *Message) SetUnknown(raw protoreflect.RawFields)
  - func (m *Message) Shared() *Shared
  - func (m *Message) Type() protoreflect.MessageType
  - func (m *Message) Unmarshal(data []byte, options ...UnmarshalOption) error
  - func (m *Message) WhichOneof(od protoreflect.OneofDescriptor) protoreflect.FieldDescriptor
- type MessageType
  - func CompileFileDescriptorSet(fds *descriptorpb.FileDescriptorSet, messageName protoreflect.FullName, options ...CompileOption) (*MessageType, error)
  - func CompileMessageDescriptor(md protoreflect.MessageDescriptor, options ...CompileOption) *MessageType
  - func (t *MessageType) Descriptor() protoreflect.MessageDescriptor
  - func (t *MessageType) Format(f fmt.State, verb rune)
  - func (t *MessageType) New() protoreflect.Message
  - func (t *MessageType) NewProfile() *Profile
  - func (t *MessageType) Recompile(profile *Profile) *MessageType
  - func (t *MessageType) Zero() protoreflect.Message
- type Profile
- type Shared
  - func (s *Shared) Free()
  - func (s *Shared) NewMessage(msgType *MessageType) *Message
- type UnmarshalOption
  - func WithAllowAlias(allow bool) UnmarshalOption
  - func WithAllowInvalidUTF8(allow bool) UnmarshalOption
  - func WithDiscardUnknown(discard bool) UnmarshalOption
  - func WithMaxDecodeMisses(maxMisses int) UnmarshalOption
  - func WithMaxDepth(depth int) UnmarshalOption
  - func WithRecordProfile(profile *Profile, rate float64) UnmarshalOption

Examples ¶

- Example
- Example (Protovalidate)
- Example (UnmarshalFromDescriptor)

Constants ¶

This section is empty.

Variables ¶

This section is empty.

Functions ¶

This section is empty.

Types ¶

type CompileOption ¶

type CompileOption struct {

// contains filtered or unexported fields

}

CompileOption is a configuration setting for CompileMessageDescriptor and CompileFileDescriptorSet.

func WithExtensions ¶

func WithExtensions(resolver compiler.ExtensionResolver) CompileOption

WithExtensions provides an extension resolver for a compiler.

Unlike ordinary Protobuf parsers, hyperpb does not perform extension resolution on the fly. Instead, any extensions that should be parsed must be provided up-front.

func WithExtensionsFromFiles ¶

func WithExtensionsFromFiles(files *protoregistry.Files) CompileOption

WithExtensionsFromFiles uses a file registry to provide extension information about a message type.

func WithExtensionsFromTypes ¶

func WithExtensionsFromTypes(types *protoregistry.Types) CompileOption

WithExtensionsFromTypes uses a type registry to provide extension information about a message type.

func WithProfile ¶

func WithProfile(profile *Profile) CompileOption

WithProfile provides a profile for profile-guided optimization.

Typically, you'll prefer to use MessageType.Recompile.

type Message ¶

type Message struct {

// contains filtered or unexported fields

}

Message implements protoreflect.Message.

func NewMessage ¶

func NewMessage(ty *MessageType) *Message

NewMessage allocates a new Message of the given MessageType.

See Shared.NewMessage.

func (*Message) Clear ¶

func (m *Message) Clear(protoreflect.FieldDescriptor)

Clear panics, unless this message has not been unmarshaled yet.

Clear implements protoreflect.Message.

func (*Message) Descriptor ¶

func (m *Message) Descriptor() protoreflect.MessageDescriptor

Descriptor returns the message descriptor, which contains only the Protobuf type information for the message.

Descriptor implements protoreflect.Message.

func (*Message) Get ¶

func (m *Message) Get(fd protoreflect.FieldDescriptor) protoreflect.Value

Get retrieves the value for a field.

For unpopulated scalars, it returns the default value, where the default value of a bytes scalar is guaranteed to be a copy. For unpopulated composite types, it returns an empty, read-only view of the value.

Get implements protoreflect.Message.

func (*Message) GetUnknown ¶

func (m *Message) GetUnknown() protoreflect.RawFields

GetUnknown retrieves the entire list of unknown fields.

GetUnknown implements protoreflect.Message.

func (*Message) Has ¶

func (m *Message) Has(fd protoreflect.FieldDescriptor) bool

Has reports whether a field is populated.

Some fields have the property of nullability where it is possible to distinguish between the default value of a field and whether the field was explicitly populated with the default value. Singular message fields, member fields of a oneof, and proto2 scalar fields are nullable. Such fields are populated only if explicitly set.

In other cases (aside from the nullable cases above), a proto3 scalar field is populated if it contains a non-zero value, and a repeated field is populated if it is non-empty.

Has implements protoreflect.Message.
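The presence rules above can be mirrored with ordinary Go types. This is an analogy, not hyperpb code: the hypothetical `report` struct uses a plain value for an implicit-presence proto3 scalar, a pointer for a nullable field, and a slice for a repeated field.

```go
package main

import "fmt"

// report is a hypothetical struct mirroring three presence rules.
type report struct {
	Region   string   // proto3 scalar: implicit presence
	Pressure *float64 // nullable (e.g. proto2 scalar or oneof member): explicit presence
	Stations []string // repeated field
}

// Implicit presence: populated only if the value is non-zero.
func hasRegion(r report) bool { return r.Region != "" }

// Explicit presence: populated if set, even when set to the default value.
func hasPressure(r report) bool { return r.Pressure != nil }

// Repeated: populated if non-empty.
func hasStations(r report) bool { return len(r.Stations) > 0 }

func main() {
	zero := 0.0
	// Pressure is explicitly set to its default value, 0.
	r := report{Region: "", Pressure: &zero}
	fmt.Println(hasRegion(r), hasPressure(r), hasStations(r)) // false true false
}
```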

func (*Message) HyperType ¶

func (m *Message) HyperType() *MessageType

HyperType returns the MessageType for this value.

func (*Message) Initialized ¶

func (m *Message) Initialized() error

Initialized checks whether m contains any unset required fields: it returns a non-nil error if any required field is not set, and nil otherwise.

func (*Message) Interface ¶

func (m *Message) Interface() protoreflect.ProtoMessage

Interface returns m.

Interface implements protoreflect.Message.

func (*Message) IsValid ¶

func (m *Message) IsValid() bool

IsValid reports whether the message is valid.

An invalid message is an empty, read-only value.

An invalid message often corresponds to a nil pointer of the concrete message type, but the details are implementation dependent. Validity is not part of the protobuf data model, and may not be preserved in marshaling or other operations.

IsValid implements protoreflect.Message.

func (*Message) Mutable ¶

func (m *Message) Mutable(protoreflect.FieldDescriptor) protoreflect.Value

Mutable panics.

Mutable implements protoreflect.Message.

func (*Message) New ¶

func (m *Message) New() protoreflect.Message

New returns a newly allocated empty message.

New implements protoreflect.Message.

func (*Message) NewField ¶

func (m *Message) NewField(protoreflect.FieldDescriptor) protoreflect.Value

NewField panics.

NewField implements protoreflect.Message.

func (*Message) ProtoMethods ¶

func (m *Message) ProtoMethods() *protoiface.Methods

ProtoMethods returns optional fast-path implementations of various operations.

ProtoMethods implements protoreflect.Message.

func (*Message) ProtoReflect ¶

func (m *Message) ProtoReflect() protoreflect.Message

ProtoReflect implements proto.Message.

func (*Message) Range ¶

func (m *Message) Range(yield func(protoreflect.FieldDescriptor, protoreflect.Value) bool)

Range iterates over every populated field in an undefined order, calling yield for each field descriptor and value encountered. Range returns immediately if yield returns false.

Range implements protoreflect.Message.

func (*Message) Reset ¶

func (m *Message) Reset()

Reset panics, unless this message has not been unmarshaled yet.

Reset implements an interface used to speed up proto.Reset; it is not part of the protoreflect.Message interface.

func (*Message) Set ¶

func (m *Message) Set(protoreflect.FieldDescriptor, protoreflect.Value)

Set panics.

Set implements protoreflect.Message.

func (*Message) SetUnknown ¶

func (m *Message) SetUnknown(raw protoreflect.RawFields)

SetUnknown panics, unless raw is zero-length, in which case it does nothing.

SetUnknown implements protoreflect.Message.

func (*Message) Type ¶

func (m *Message) Type() protoreflect.MessageType

Type returns the message type, which encapsulates both Go and Protobuf type information. If the Go type information is not needed, it is recommended that the message descriptor be used instead.

Type implements protoreflect.Message; it always returns a *MessageType.

func (*Message) Unmarshal ¶

func (m *Message) Unmarshal(data []byte, options ...UnmarshalOption) error

Unmarshal is like proto.Unmarshal, but permits hyperpb-specific tuning options to be set.

Calling this function may be much faster than calling proto.Unmarshal for small messages: proto.Unmarshal adds several nanoseconds of overhead, which becomes noticeable for messages of around 16 bytes.

The returned error may additionally implement a method with the signature

    Offset() int

If present, this method returns the approximate offset into data at which the error occurred.

func (*Message) WhichOneof ¶

func (m *Message) WhichOneof(od protoreflect.OneofDescriptor) protoreflect.FieldDescriptor

WhichOneof reports which field within the oneof is populated, returning nil if none are populated. It panics if the oneof descriptor does not belong to this message.

WhichOneof implements protoreflect.Message.

type MessageType ¶

type MessageType struct {

// contains filtered or unexported fields

}

MessageType implements protoreflect.MessageType.

To obtain an optimized MessageType, use any of the Compile* functions.

func CompileFileDescriptorSet ¶

func CompileFileDescriptorSet(fds *descriptorpb.FileDescriptorSet, messageName protoreflect.FullName, options ...CompileOption) (*MessageType, error)

CompileFileDescriptorSet looks up the message named messageName in fds, a google.protobuf.FileDescriptorSet, and compiles a type for it.

func CompileMessageDescriptor ¶

func CompileMessageDescriptor(md protoreflect.MessageDescriptor, options ...CompileOption) *MessageType

CompileMessageDescriptor compiles a descriptor into a MessageType, for optimized parsing.

Panics if md is too complicated (i.e. it exceeds internal limitations for the compiler).

func (*MessageType) Descriptor ¶

func (t *MessageType) Descriptor() protoreflect.MessageDescriptor

Descriptor returns the message descriptor.

Descriptor implements protoreflect.MessageType.

func (*MessageType) Format ¶

func (t *MessageType) Format(f fmt.State, verb rune)

Format implements fmt.Formatter.

func (*MessageType) New ¶

func (t *MessageType) New() protoreflect.Message

New returns a newly allocated empty message. It may return nil for synthetic messages representing a map entry.

New implements protoreflect.MessageType.

func (*MessageType) NewProfile ¶

func (t *MessageType) NewProfile() *Profile

NewProfile creates a new profiler for this type, which can be used to profile messages of this type when unmarshaling.

The returned profiler cannot be used with messages of other types.

func (*MessageType) Recompile ¶

func (t *MessageType) Recompile(profile *Profile) *MessageType

Recompile recompiles this type with a recorded profile.

Note that this profile cannot be used with the new type; you must create a fresh profile using MessageType.NewProfile and begin recording anew.

func (*MessageType) Zero ¶

func (t *MessageType) Zero() protoreflect.Message

Zero returns an empty, read-only message. It may return nil for synthetic messages representing a map entry.

Zero implements protoreflect.MessageType.

type Profile ¶

type Profile struct {

// contains filtered or unexported fields

}

Profile can be used to profile messages associated with the same collection of types. It can later be used to re-compile the original types to be more efficient.

Profile itself is an opaque pointer; it only exists to be passed to WithRecordProfile when unmarshaling, and to MessageType.Recompile or WithProfile when compiling.

type Shared ¶

type Shared struct {

// contains filtered or unexported fields

}

Shared is state that is shared by all messages in a particular tree of messages.

The zero value is ready to use: construct it with new(Shared).

func (*Shared) Free ¶

func (s *Shared) Free()

Free releases any resources held by this value, allowing them to be re-used.

Any messages previously parsed using this value must not be used after Free is called.

func (*Shared) NewMessage ¶

func (s *Shared) NewMessage(msgType *MessageType) *Message

NewMessage allocates a new message using this value's resources.

type UnmarshalOption ¶

type UnmarshalOption struct {

// contains filtered or unexported fields

}

UnmarshalOption is a configuration setting for Message.Unmarshal.

func WithAllowAlias ¶

func WithAllowAlias(allow bool) UnmarshalOption

WithAllowAlias sets whether aliasing the input buffer is allowed. This avoids an expensive copy at the start of parsing.

Analogous to [protoimpl.UnmarshalAliasBuffer].
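To illustrate what aliasing the input buffer trades away, the sketch contrasts a parse result that keeps a sub-slice of its input with one that copies. It assumes, by analogy with the protobuf-go aliasing flag referenced above, that an aliased message may point into data, so the caller should not modify or reuse data while the message is in use. `view`, `parseAliased`, and `parseCopied` are hypothetical.

```go
package main

import "fmt"

// view is a hypothetical parsed result holding a string field as bytes.
type view struct{ name []byte }

// parseAliased keeps a sub-slice of the input: no copy, but shared memory.
func parseAliased(data []byte) view { return view{name: data[1:4]} }

// parseCopied copies the bytes it keeps, so later writes to data don't leak in.
func parseCopied(data []byte) view {
	return view{name: append([]byte(nil), data[1:4]...)}
}

func main() {
	data := []byte("xKADy")
	a, c := parseAliased(data), parseCopied(data)

	data[1] = 'Z' // The caller reuses its buffer after parsing.

	// The aliased view observes the write; the copied one does not.
	fmt.Println(string(a.name), string(c.name)) // ZAD KAD
}
```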

func WithAllowInvalidUTF8 ¶

func WithAllowInvalidUTF8(allow bool) UnmarshalOption

WithAllowInvalidUTF8 sets whether invalid UTF-8 is permitted in string fields originating from non-proto2 files; passing true skips UTF-8 validation for those fields.
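Validation here amounts to checking that a string field's bytes are well-formed UTF-8, which the Go standard library exposes as utf8.Valid. The hypothetical `checkString` below sketches that per-field decision; it is not hyperpb's implementation.

```go
package main

import (
	"errors"
	"fmt"
	"unicode/utf8"
)

var errInvalidUTF8 = errors.New("string field contains invalid UTF-8")

// checkString rejects string-field bytes that are not well-formed UTF-8,
// unless the caller has opted to allow invalid UTF-8.
func checkString(b []byte, allowInvalid bool) error {
	if !allowInvalid && !utf8.Valid(b) {
		return errInvalidUTF8
	}
	return nil
}

func main() {
	bad := []byte{0xff, 'o', 'k'} // 0xff never appears in valid UTF-8.
	fmt.Println(checkString([]byte("Seattle"), false)) // <nil>
	fmt.Println(checkString(bad, false))               // string field contains invalid UTF-8
	fmt.Println(checkString(bad, true))                // <nil>
}
```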

func WithDiscardUnknown ¶

func WithDiscardUnknown(discard bool) UnmarshalOption

WithDiscardUnknown sets whether unknown fields should be discarded while parsing. Analogous to the DiscardUnknown field of proto.UnmarshalOptions.

Setting this option will break round-tripping, but will also improve parse speeds of messages with many unknown fields.

func WithMaxDecodeMisses ¶

func WithMaxDecodeMisses(maxMisses int) UnmarshalOption

WithMaxDecodeMisses sets the number of decode misses allowed in the parser before switching to the slow path.

Large values may improve performance for common protos, but introduce a potential DoS vector due to quadratic worst case performance. The default is 4.

func WithMaxDepth ¶

func WithMaxDepth(depth int) UnmarshalOption

WithMaxDepth sets the maximum recursion depth for the parser.

Setting a large value enables potential DoS vectors.
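A depth limit turns unbounded recursion on adversarially nested input into an ordinary error. The toy below treats each '(' as the start of a nested message and stops once nesting exceeds maxDepth; `walk` and `checkDepth` are hypothetical, and the input format is invented purely for illustration.

```go
package main

import (
	"errors"
	"fmt"
)

var errTooDeep = errors.New("exceeded maximum nesting depth")

// walk scans s from index i, recursing into each '(' as a nested message.
// It fails once depth exceeds maxDepth instead of recursing without bound.
func walk(s string, i, depth, maxDepth int) (int, error) {
	if depth > maxDepth {
		return 0, errTooDeep
	}
	for i < len(s) {
		switch s[i] {
		case '(':
			j, err := walk(s, i+1, depth+1, maxDepth)
			if err != nil {
				return 0, err
			}
			i = j
		case ')':
			return i + 1, nil // End of the current nested message.
		default:
			i++
		}
	}
	return i, nil
}

// checkDepth reports whether s stays within maxDepth levels of nesting.
func checkDepth(s string, maxDepth int) error {
	_, err := walk(s, 0, 0, maxDepth)
	return err
}

func main() {
	fmt.Println(checkDepth("(a(b))", 2))    // <nil>
	fmt.Println(checkDepth("((((x))))", 2)) // exceeded maximum nesting depth
}
```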

func WithRecordProfile ¶

func WithRecordProfile(profile *Profile, rate float64) UnmarshalOption

WithRecordProfile sets a profiler for an unmarshaling operation. rate is a value from 0 to 1 that specifies the sampling rate. profile may be nil, in which case nothing is recorded.

Profiling should be done over many, many messages, all recorded with the same rate. This allows the profiler to collect statistically relevant data, which can then be used to recompile the type to be more efficient using MessageType.Recompile.

Directories


| Path | Synopsis |
|---|---|
| internal | |
| internal/arena | Package arena provides a low-level, relatively unsafe arena allocation abstraction. |
| internal/examples | Package examples contains helpers for making the examples in hyperpb/example_test.go easier to read. |
| internal/scc | Package scc contains an implementation of Tarjan's algorithm, which converts a directed graph into a DAG of strongly-connected components (subgraphs such that every node is reachable from every other node). |
| internal/swiss | Package swiss provides arena-friendly Swisstable implementations. |
| internal/tdp | Package tdp contains the "object file format" used by hyperpb's parser. |
| internal/tdp/compiler/linker | Package linker provides a general-purpose in-memory linker, that is used to assemble the output buffer within the compiler. |
| internal/tdp/dynamic | Package dynamic contains the implementation of hyperpb's dynamic message types. |
| internal/tdp/maps | Package maps contains shared layouts for maps field implementations, for sharing between the tdp packages and the gencode packages. |
| internal/tdp/repeated | Package repeated contains shared layouts for repeated field implementations, for sharing between the tdp packages and the gencode packages. |
| internal/tdp/thunks | Package thunks provides all thunks for the parser VM. |
| internal/tdp/vm | Package vm contains the core interpreter VM for the hyperpb parser. |
| internal/tools/hyperdump (command) | hyperdump cleans up the output of go tool objdump into something readable. |
| internal/tools/hyperstencil (command) | hyperstencil is a code generator for generating full specializations of generic functions. |
| internal/tools/hypertest (command) | xtest is a helper for running tests that adds a few useful features: |
| internal/xprotoreflect | Package xprotoreflect contains helpers for working around inefficiencies in protoreflect. |
| internal/xunsafe | Package unsafe provides a more convenient interface for performing unsafe operations than Go's built-in package unsafe. |
| internal/xunsafe/support | Package support ensures that this module is only compiled for supported architectures. |
| internal/zc | Package zc provides helpers for working with zero-copy ranges. |